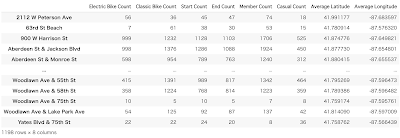

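In addition to the basic analysis from the previous post (https://smizunolab.blogspot.com/2024/01/chicago-divvy-bicycle-sharing-data1.html), we will run principal component analysis and clustering to clarify the characteristics of the data. I would prefer to keep working with the data as a time series, but to make the analysis easier we first build an aggregate table. The aggregate table has the following columns:

(station name, electric_bike count, classic_bike count, usage count (start), usage count (end), member count, casual count, mean latitude, mean longitude)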
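The aggregate table described above could be built roughly as follows. This is only a sketch: the sample trips are made up, the column names (`rideable_type`, `member_casual`, `start_station_name`, `start_lat`, and so on) are assumed to match the public Divvy trip CSVs, and `endpoint_view`, `count_by`, and the `count_`/`mean_` prefixes are names introduced here for illustration.

```python
import pandas as pd

# Tiny made-up sample; column names are assumed to follow the Divvy trip CSVs.
trips = pd.DataFrame({
    "rideable_type": ["electric_bike", "classic_bike", "classic_bike", "electric_bike"],
    "member_casual": ["member", "casual", "member", "member"],
    "start_station_name": ["A", "A", "B", "C"],
    "end_station_name": ["B", "C", "A", "A"],
    "start_lat": [41.90, 41.91, 41.80, 41.70],
    "start_lng": [-87.60, -87.61, -87.70, -87.80],
    "end_lat": [41.80, 41.70, 41.90, 41.91],
    "end_lng": [-87.70, -87.80, -87.60, -87.61],
})

def endpoint_view(df, side):
    """Return one row per trip endpoint; side is 'start' or 'end'."""
    return pd.DataFrame({
        "station": df[f"{side}_station_name"],
        "rideable_type": df["rideable_type"],
        "member_casual": df["member_casual"],
        "lat": df[f"{side}_lat"],
        "lng": df[f"{side}_lng"],
        "side": side,
    })

# Stack start and end endpoints so every count covers both ends of a trip.
endpoints = pd.concat(
    [endpoint_view(trips, "start"), endpoint_view(trips, "end")],
    ignore_index=True,
).dropna(subset=["station"])

def count_by(column):
    """Station x category count table."""
    return pd.crosstab(endpoints["station"], endpoints[column])

summary = pd.concat(
    [
        count_by("rideable_type"),               # electric_bike / classic_bike
        count_by("side").add_prefix("count_"),   # count_start / count_end
        count_by("member_casual"),               # member / casual
        endpoints.groupby("station")[["lat", "lng"]].mean().add_prefix("mean_"),
    ],
    axis=1,
)
print(summary)
```

Stacking the start and end endpoints into one long table keeps the counting rule in a single place, so the bike-type and member/casual counts automatically include both ends of each trip.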

Each count includes both trips that start at the station and trips that end there.

(1) Scatter plots and correlation coefficients

To see how the items relate to each other, we first draw scatter plots.

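This produces 15 plots in total, which matches one plot per pair of the six count columns. Many of the plots are close to linear. Next, we compute the correlation coefficients.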
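As a rough illustration of this step, the sketch below counts the column pairs and computes a correlation matrix with pandas. The data is synthetic (random numbers driven by one shared "station size" factor), not the Divvy data, and the column names and share values are assumptions chosen to mirror the aggregate table.

```python
from itertools import combinations

import numpy as np
import pandas as pd

# Synthetic stand-in for the aggregate table (NOT the real data):
# every column is driven by one shared "station size" factor plus noise.
rng = np.random.default_rng(0)
size = rng.integers(50, 5000, size=200).astype(float)
cols = ["electric_bike", "classic_bike", "count_start", "count_end", "member", "casual"]
shares = [0.5, 0.5, 0.5, 0.5, 0.7, 0.3]  # assumed shares, for illustration only
summary = pd.DataFrame(
    {c: size * s + rng.normal(0, 20, size=200) for c, s in zip(cols, shares)}
)

# Six columns give C(6, 2) = 15 distinct pairs, one scatter plot each.
pairs = list(combinations(cols, 2))
print(len(pairs))

# Pearson correlation coefficients between all columns.
corr = summary.corr()
print(corr.round(3))
```

For the plots themselves, `pandas.plotting.scatter_matrix(summary)` is one option, although it draws the full 6 × 6 grid rather than only the 15 distinct pairs.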

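The result shows that every pair of items is highly correlated. Running principal component analysis on the data as it stands would simply fold all the items into the first principal component, which would not be meaningful. Once other items are added, however, principal component analysis can combine the highly correlated items into a single axis and mitigate the multicollinearity problem.

Multicollinearity refers to a situation in statistical modeling or regression analysis where the explanatory (independent) variables are highly correlated with one another. When it occurs, it becomes difficult to estimate the effect of each explanatory variable accurately, which can undermine the interpretation and reliability of the model.

For now, rather than proceeding to principal component analysis and clustering, we check on a map where each station is located.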
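The point about the first principal component can be checked with a small numerical sketch. It uses synthetic data (six noisy copies of one underlying variable, then one unrelated extra column), and computes the principal components from the eigenvalues of the correlation matrix with NumPy. The helper name `explained_ratio` is introduced here for illustration.

```python
import numpy as np

def explained_ratio(X):
    """Share of variance per principal component, from the correlation matrix."""
    corr = np.corrcoef(X, rowvar=False)
    eigvals = np.linalg.eigvalsh(corr)[::-1]  # largest eigenvalue first
    return eigvals / eigvals.sum()

rng = np.random.default_rng(0)
base = rng.normal(size=500)

# Six highly correlated columns: noisy copies of the same underlying variable.
X = np.column_stack([base + rng.normal(scale=0.1, size=500) for _ in range(6)])
ratio_correlated = explained_ratio(X)

# Add one unrelated column, standing in for "other items" entering the analysis.
X_extra = np.column_stack([X, rng.normal(size=500)])
ratio_extra = explained_ratio(X_extra)

print(ratio_correlated.round(3))  # the first component takes nearly everything
print(ratio_extra.round(3))       # a second component now carries real variance
```

With only the correlated columns, the first component absorbs almost all of the variance, so the decomposition says little. With the extra column, the correlated group collapses into one axis and the new item gets its own.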

(2) Map display

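First, install the library used for the map display. The latitude and longitude recorded for a station vary slightly from trip to trip, so we plot their averages. There are many ways to display the data; here, stations with more usage are drawn in darker colors.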

!pip install folium
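One possible way to draw such a map with folium is sketched below. The station coordinates and totals are made-up values, `build_map` and the `opacity` column are names introduced here, and the mapping from usage to fill opacity (0.2 to 1.0) is just one assumed way to make busy stations look darker.

```python
import pandas as pd

# Made-up per-station table: averaged coordinates and total usage.
stations = pd.DataFrame({
    "station": ["A", "B", "C"],
    "mean_lat": [41.905, 41.800, 41.700],
    "mean_lng": [-87.605, -87.700, -87.800],
    "total": [400, 100, 20],
})

# Map usage to a fill opacity between 0.2 and 1.0 (busier = darker).
stations["opacity"] = 0.2 + 0.8 * stations["total"] / stations["total"].max()

def build_map(df):
    """Return a folium map with one circle per station."""
    import folium  # imported here so the table code above runs without folium

    center = [df["mean_lat"].mean(), df["mean_lng"].mean()]
    m = folium.Map(location=center, zoom_start=11)
    for row in df.itertuples():
        folium.CircleMarker(
            location=[row.mean_lat, row.mean_lng],
            radius=6,
            color="blue",
            fill=True,
            fill_color="blue",
            fill_opacity=row.opacity,
            tooltip=row.station,
        ).add_to(m)
    return m

# In a notebook, evaluating build_map(stations) in a cell renders the map.
```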

(3) Transition counts and transition probabilities between stations

Next, we compute the transition counts between stations.

1060 rows × 1086 columns

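The resulting table has different numbers of rows and columns. In other words, some stations are used only as a starting point or only as an ending point. We extract only the stations that appear as both, which gives 1003 stations. Some of these had a row sum of 0; removing them leaves 999 stations. The rightmost column is the row sum.

999 rows × 1000 columns

We then convert these counts into transition probabilities, so that each row sums to 1.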
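The steps above (count matrix, keeping stations present on both sides, dropping zero rows, row-normalizing) can be sketched with pandas as follows. The trips are a made-up toy example, and the column names `start_station_name` and `end_station_name` are assumed to match the Divvy CSVs.

```python
import pandas as pd

# Toy trips: X only appears as a start, Y and Z only as an end,
# and D has no trips to any station that is kept.
trips = pd.DataFrame({
    "start_station_name": list("AAAAABBBCCCCDX"),
    "end_station_name":   list("BBCYZACDAAABYA"),
})

# Transition counts: rows are start stations, columns are end stations.
counts = pd.crosstab(trips["start_station_name"], trips["end_station_name"])
print(counts.shape)  # rows and columns differ, as in the real data

# Keep only stations that appear as both a start and an end.
common = counts.index.intersection(counts.columns)
square = counts.loc[common, common]

# Drop stations whose row sum is 0 (from both rows and columns).
keep = square.index[square.sum(axis=1) > 0]
square = square.loc[keep, keep]

# Count table with the row sum as the rightmost column.
with_total = square.assign(row_sum=square.sum(axis=1))

# Divide each row by its sum to get transition probabilities.
P = square.div(square.sum(axis=1), axis=0)
print(P.round(3))
```

This sketch filters zero rows in a single pass. Removing a station's column can in principle leave another row with a zero sum, so it is worth re-checking `square.sum(axis=1)` before dividing.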

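We display these transition probabilities as a heatmap. The natural next step would be to find the stationary distribution, but this transition matrix is probably not ergodic, so that cannot be done in a single step. Here we stop at computing the transition probabilities.

(4) Average trip duration between stations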

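Using the same stations as for the transition probabilities, we compute the average trip duration between each pair of stations. For each pair we obtain the cumulative trip duration, the number of trips, and the average trip duration.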

From these, we build an average trip duration matrix over the same stations as the transition probability matrix. This gives us the travel time between stations.
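A minimal sketch of this computation is shown below. The trips are made up, the `started_at` and `ended_at` column names are assumed to match the Divvy CSVs, and the station list `stations` stands in for the stations kept in the transition probability matrix.

```python
import pandas as pd

# Made-up trips with start and end timestamps.
trips = pd.DataFrame({
    "start_station_name": ["A", "A", "B", "B", "C"],
    "end_station_name":   ["B", "B", "A", "C", "A"],
    "started_at": pd.to_datetime([
        "2024-01-01 08:00", "2024-01-01 09:00", "2024-01-01 10:00",
        "2024-01-01 11:00", "2024-01-01 12:00",
    ]),
    "ended_at": pd.to_datetime([
        "2024-01-01 08:10", "2024-01-01 09:20", "2024-01-01 10:12",
        "2024-01-01 11:08", "2024-01-01 12:30",
    ]),
})

# Trip duration in minutes.
trips["minutes"] = (trips["ended_at"] - trips["started_at"]).dt.total_seconds() / 60

# Cumulative duration, trip count, and mean duration per station pair.
pair_stats = (
    trips.groupby(["start_station_name", "end_station_name"])["minutes"]
    .agg(total="sum", trips="count", mean="mean")
    .reset_index()
)

# Stations kept in the transition probability matrix (assumed list).
stations = ["A", "B", "C"]

# Mean-duration matrix on the same station set; pairs with no trips are NaN.
mean_minutes = pair_stats.pivot(
    index="start_station_name", columns="end_station_name", values="mean"
).reindex(index=stations, columns=stations)
print(mean_minutes)
```

Pairs with no recorded trips are left as `NaN` rather than 0, so that a missing observation is not mistaken for a zero-minute trip.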

